Visual effects (VFX) integrate live-action footage with artificially created, realistic objects, characters, and environments. Professionals use specialized software to create almost magical imagery that adds a sense of realism. VFX is mostly needed for shots that would be dangerous, impractical, or impossible to film.

Software used for visual effects

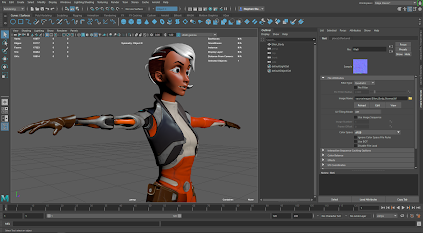

Autodesk 3ds Max

Maya vs 3ds Max

3ds Max is better for modeling, texturing, meshing, and mobile game development, while Maya is better for video games and animation. Maya was first released in February 1998; 3ds Max was first released around 1996.

(Language availability)

Maya is available in English, Japanese, and Chinese.

3ds Max is available in English, German, French, Brazilian Portuguese, Japanese, Chinese, and Korean.

Maya is available for Windows, Linux, and macOS.

3ds Max is available for Windows only.

ZBrush

ZBrush is a digital sculpting tool used for creating high-resolution models. ZBrush is used only for sculpting, rendering, and texturing, while Maya is used for animation, VFX, modeling, rendering, and lighting. Autodesk Maya is a complete package that provides modeling, simulation, rendering, visual effects, motion graphics, and animation.

Mocha

The PowerMesh tool in Mocha enables a powerful sub-planar tracking process for visual effects and rotoscoping. Mocha Pro can now track warped surfaces and organic objects, and it is widely used for digital makeup shots.

PowerMesh is simple to use and faster than most optical-flow-based techniques. Use PowerMesh to drive roto shapes with fewer keyframes. You can export mesh vertices to Null layers in After Effects, to Nuke tracking data, or in Alembic format for Flame, C4D, and other tools.

Roto with Fewer Keyframes

Mocha's masking workflow reduces manual keyframing. A small number of keyframes is enough for a roto shape because Mocha automatically tracks the rotoscoped subject.

X-Splines and Bezier splines are both available for rotoscoping in Mocha, with magnetic edge-snapping assistance that makes the work easier. With the Area Brush tool, you can paint over a particular region to mask it, which helps create detailed mask shapes.

PowerMesh is used to mask organic moving objects such as musculature, skin, fabrics, and more.

Stabilize Camera or Object Motion

Mocha can stabilize even the toughest, shakiest shots. You can export the stabilized tracking data or render a stabilized clip.

Many post-production technicians use the Warp Stabilizer inside After Effects and Premiere for simple stabilizations. With PowerMesh tracking enabled, Mocha's Stabilize Module can produce an inverse-warped, flattened surface for paint fixes, and the original motion is then easily propagated back onto the shot. Other compositing tools also include rotoscoping tools, but roto artists in VFX studios still commonly work in Mocha.
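To make the idea of stabilization concrete, here is a minimal sketch in Python. It assumes the simplest possible case, a single tracked point and translation only, and is not Mocha's planar or PowerMesh algorithm. The function names and the sample track are hypothetical.

```python
def stabilizing_offsets(track, reference_frame=0):
    """Return per-frame (dx, dy) offsets that pin the tracked point
    to the position it had on the reference frame."""
    ref_x, ref_y = track[reference_frame]
    return [(ref_x - x, ref_y - y) for x, y in track]


def apply_offsets(track, offsets):
    """Shift each tracked position by its offset, as the stabilized clip would."""
    return [(x + dx, y + dy) for (x, y), (dx, dy) in zip(track, offsets)]


# Hypothetical tracked positions of one feature over four frames of a shaky shot.
track = [(100.0, 50.0), (103.0, 48.0), (98.0, 53.0), (101.0, 49.0)]
offsets = stabilizing_offsets(track)
stabilized = apply_offsets(track, offsets)
```

After the offsets are applied, the feature sits at the same position on every frame. Negating the offsets restores the original shake, which is the same idea as putting the motion back after a paint fix.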

Nuke

Nuke won an Academy Award for Technical Achievement in 2001.

Nuke is currently one of the most popular compositing packages, sometimes described as "Photoshop for moving images".

Nodal toolset

Nuke has more than 200 creative nodes and delivers everything you need to tackle the diverse challenges of digital compositing. Its tools include industry-standard keyers, rotoscoping, vector paint tools, color correction, and much more.

3D Camera Tracker

The integrated Camera Tracker in NukeX® and Nuke Studio replicates the motion of the camera that filmed the shot, so you can composite 2D and 3D elements accurately with reference to the original camera. Refinement options, an advanced 3D feature preview, and lens distortion handling improve efficiency and accuracy on the trickiest tracking tasks, helping you get the most out of a 3D workflow.
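Once a camera has been solved, placing a 3D element in the plate comes down to projecting its points through that camera. The Python sketch below uses an ideal pinhole model with no lens distortion. It assumes a camera at the origin looking down the positive Z axis with square pixels; this is a simplification for illustration, not Nuke's own convention or code. `project_point` and its parameters are hypothetical names.

```python
def project_point(point, focal_mm, haperture_mm, width, height):
    """Project a 3D point in camera space to pixel coordinates
    using an ideal pinhole camera (no lens distortion)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    # Perspective divide: position on the film back, in millimetres.
    x_film = focal_mm * x / z
    y_film = focal_mm * y / z
    # Convert millimetres on the film back to pixels (square pixels assumed).
    pixels_per_mm = width / haperture_mm
    return (width / 2 + x_film * pixels_per_mm,
            height / 2 + y_film * pixels_per_mm)


# Hypothetical values: 50 mm lens, 25 mm wide film back, 2000x1080 plate.
pixel = project_point((1.0, 0.5, 10.0), 50.0, 25.0, 2000, 1080)
```

A point on the optical axis lands at the image center, and moving the point farther away pulls its projection toward the center, which is why an accurate solve matters for elements that must stick to the plate.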

Nuke provides a wide range of keyers, such as Primatte, Ultimatte, and IBK, as well as Foundry's Keylight®.
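To give a feel for what a keyer computes, here is a deliberately simplified green-screen key in Python. It is a basic color-difference sketch, not the algorithm used by Primatte, Ultimatte, IBK, or Keylight. The function name and the `gain` parameter are hypothetical.

```python
def green_screen_alpha(r, g, b, gain=1.0):
    """Return an alpha value in [0, 1] for one pixel:
    0 where the pixel is screen green, 1 where it is foreground."""
    # How much greener the pixel is than its strongest other channel.
    green_excess = g - max(r, b)
    alpha = 1.0 - gain * green_excess
    # Clamp so foreground pixels do not exceed full opacity.
    return min(1.0, max(0.0, alpha))


screen = green_screen_alpha(0.0, 1.0, 0.0)    # pure green backing
skin = green_screen_alpha(0.8, 0.6, 0.5)      # typical foreground color
edge = green_screen_alpha(0.25, 0.75, 0.25)   # semi-transparent edge pixel
```

Pure screen green becomes fully transparent, the foreground color stays fully opaque, and the edge pixel gets a partial alpha. Production keyers add much more, such as spill suppression and screen-color sampling.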

Nuke’s flexible and robust tool-set empowers teams to create pixel-perfect content every time.

Advanced compositing tools

Nuke's deep compositing tools reduce the need to re-render CG elements when the content of a shot changes.
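The reason deep data avoids re-rendering can be sketched in a few lines of Python. The example assumes each deep pixel is a list of point samples of the form (depth, premultiplied color, alpha) with a single color channel; real deep images carry more than this, and none of the names below come from Nuke.

```python
def flatten(samples):
    """Composite deep samples front to back with the 'over' operation
    and return the flat (color, alpha) for the pixel."""
    color, alpha = 0.0, 0.0
    for _depth, sample_color, sample_alpha in sorted(samples, key=lambda s: s[0]):
        # Whatever is already in front hides part of this sample.
        color += (1.0 - alpha) * sample_color
        alpha += (1.0 - alpha) * sample_alpha
    return color, alpha


# Hypothetical deep samples for one pixel: (depth, premultiplied color, alpha).
fog = [(5.0, 0.25, 0.5)]
character = [(10.0, 0.5, 1.0)]
before = flatten(fog + character)

# A new element is added between them later; nothing has to be re-rendered.
smoke = [(7.0, 0.25, 0.25)]
after = flatten(fog + character + smoke)
```

Because every sample keeps its own depth, a new element can be merged in at any distance from the camera and the existing renders still occlude it correctly.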

Nuke supports Python scripting

With very little programming knowledge you can make widgets and scripts using Python scripting in Nuke.
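As a small illustration, the sketch below creates a Blur node, creates a Grade node, and connects them. It uses `nuke.nodes.<Class>(...)` and `setInput`, which are part of Nuke's Python API; the helper name `build_blur_grade_chain` is hypothetical. The `nuke` module exists only inside Nuke's bundled Python, so the import is guarded and the module is passed in as an argument, which lets the script load elsewhere without errors.

```python
try:
    import nuke  # only available inside Nuke's own Python interpreter
except ImportError:
    nuke = None


def build_blur_grade_chain(nuke_module, blur_size=5.0):
    """Create a Blur node feeding a Grade node and return both."""
    blur = nuke_module.nodes.Blur(size=blur_size)
    grade = nuke_module.nodes.Grade()
    # Connect the Grade node's first input to the Blur node's output.
    grade.setInput(0, blur)
    return blur, grade


# Inside Nuke (for example in the Script Editor) this builds the two nodes.
if nuke is not None:
    build_blur_grade_chain(nuke)
```

In practice a script like this is usually run from the Script Editor or attached to a menu item, and the same pattern extends to any node class available in the node graph.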

Tracking is easy

Nuke's 2D tracker and 3D camera tracker offer a variety of options that make tracking easier. It is also possible to import tracking data from other software such as Mocha.

Nuke supports leading industry standards such as OpenEXR, as well as rising technologies including Hydra and USD. With support for OpenColorIO and ACES, color management is easy and ensures consistent color from capture through to delivery.

Vikram Kulkarni — Senior Compositor, Double Negative

"Nuke has made possible things we couldn't have imagined doing in compositing. There is not a single project where we don't need to use its 3D pipeline for ease. I cannot thank Foundry enough for making comping so exciting!"

Search for "dcstechie yash" on Google to find this blog. Watch this video to learn more.